The abundance of radio data should make it straightforward to extend the encoder to other languages.This chapter examines the mood systems of Niger-Congo languages. We also hope to expand its capabilities to more languages from the Niger-Congo family and beyond, so that literacy or ability to speak a foreign language are not prerequisites for accessing the benefits of technology. “Future work could expand its vocabulary to application domains such as microfinance, agriculture, or education. We also showed how this model can power a voice interface for contact management,” the coauthors wrote. “To the best of our knowledge, the multilingual speech recognition models we trained are the first-ever to recognize speech in Maninka, Pular, and Susu.

As the coauthors note, smartphone access has exploded in the Global South, with an estimated 24.5 million smartphone owners in South Africa alone, according to Statista, making this sort of assistant likely to be useful.

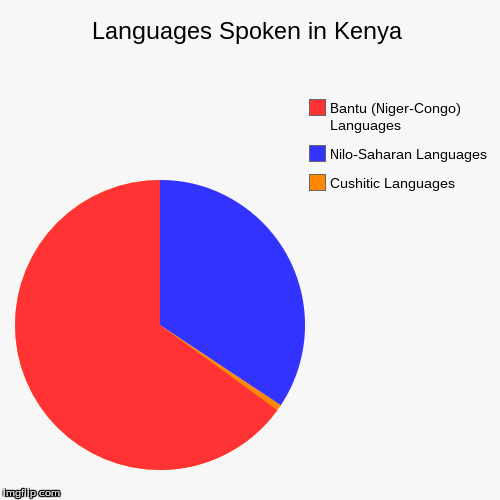

The assistant - which is available in open source along with the datasets - can recognize basic contact management commands (e.g., “search,” “add,” “update,” and “delete”) in addition to names and digits. Virtual assistantĪs a proof of concept, the researchers used WAwav2vec to create a prototype of a speech assistant. In one experiment with speech across French, Maninka, Pular, and Susu, the coauthors say that they achieved multilingual speech recognition accuracy (88.01%) on par with Facebook’s baseline wav2vec model (88.79%) - despite the fact that the baseline model was trained on 960 hours of speech versus WAwav2vec’s 142 hours. To this end, it uses attention functions instead of a recurrent neural network to predict what comes next in a sequence.ĭespite the fact that the radio dataset includes phone calls as well as background and foreground music, static, and interference, the researchers managed to train a wav2vec model with the West African Radio Corpus, which they call WAwav2vec. Created by Google researchers in 2017, the Transformer network architecture was initially intended as a way to improve machine translation. Wav2vec uses an encoder module that takes raw audio and outputs speech representations, which are fed into a Transformer that ensures the representations capture whole-audio-sequence information. To create a speech recognition system, the researchers tapped Facebook’s wav2vec, an open source framework for unsupervised speech processing. The broadcasts in the West African Radio Corpus span news and shows in languages including French, Guerze, Koniaka, Kissi, Kono, Maninka, Mano, Pular, Susu, and Toma. 
The West African Speech Recognition Corpus contains over 10,000 hours of recorded speech in French, Maninka, Susu, and Pular from roughly 49 speakers, including Guinean first names and voice commands like “update that,” “delete that,” “yes,” and “no.” As for the West African Radio Corpus, it consists of 17,000 audio clips sampled from archives collected from six Guinean radio stations. The researchers created two datasets, West African Speech Recognition Corpus and the West African Radio Corpus, intended for applications targeting West African languages. MetaBeat will bring together thought leaders to give guidance on how metaverse technology will transform the way all industries communicate and do business on October 4 in San Francisco, CA. But this week, researchers at Guinea-based tech accelerator GNCode and Stanford detailed a new initiative that uniquely advocates using radio archives in developing speech systems for “low-resource” languages, particularly Maninka, Pular, and Susu in the Niger Congo family.

Nonprofit efforts are underway to close the gap, including 1000 Words in 1000 Languages, Mozilla’s Common Voice, and the Masakhane project, which seeks to translate African languages using neural machine translation. This data deficit persists for several reasons, chief among them the fact that creating products for languages spoken by smaller populations can be less profitable. Yet in many countries, these people tend to speak only languages for which the datasets necessary to train a speech recognition model are scarce. Were you unable to attend Transform 2022? Check out all of the summit sessions in our on-demand library now! Watch here.įor many of the 700 million illiterate people around the world, speech recognition technology could provide a bridge to valuable information.
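
The article describes wav2vec only at a high level: an encoder turns raw audio into speech representations, and a Transformer uses attention so that each representation carries information from the whole audio sequence. The Python sketch below illustrates that data flow under heavy simplifying assumptions. The names `toy_encoder` and `self_attention` are hypothetical; the frame-averaging "encoder" is a stand-in for wav2vec's learned convolutional encoder, and the attention step is a single head with no learned projections, so this is not Facebook's implementation or the researchers' WAwav2vec model.

```python
import math
from typing import List

Vector = List[float]


def toy_encoder(samples: List[float], frame_size: int = 4) -> List[Vector]:
    """Hypothetical stand-in for wav2vec's learned convolutional encoder:
    split raw audio into non-overlapping frames (dropping any incomplete
    final frame) and describe each frame by its mean amplitude and energy."""
    frames = [samples[i:i + frame_size]
              for i in range(0, len(samples) - frame_size + 1, frame_size)]
    return [[sum(f) / len(f), sum(x * x for x in f) / len(f)] for f in frames]


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(xs: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product self-attention with identity
    projections (queries = keys = values = inputs), a simplification
    of one Transformer layer."""
    d = len(xs[0])
    outputs = []
    for q in xs:
        # Score this frame against every frame in the sequence, itself included.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in xs]
        weights = softmax(scores)
        # Each output is a weighted average over the whole sequence.
        outputs.append([sum(w * v[j] for w, v in zip(weights, xs)) for j in range(d)])
    return outputs


# Usage: 12 made-up audio samples -> 3 frame representations -> 3 context vectors.
audio = [0.0, 0.1, 0.2, 0.1, 0.5, 0.4, 0.6, 0.5, -0.1, 0.0, 0.1, 0.0]
reps = toy_encoder(audio)
ctx = self_attention(reps)
print(len(reps), len(ctx))  # prints "3 3"
```

Because the softmax weights are positive and sum to one, every output vector is a weighted average of all frames. That is one concrete sense in which attention lets each representation draw on the entire sequence at once, rather than passing information step by step as a recurrent neural network does.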
